There is nothing worse than planning an experiment from scratch, having your team create an immaculate mockup and develop the test, only to realize a week after launch that your secondary metrics were not tracking any conversions. Or perhaps you notice that a potentially successful hypothesis was wasted because your cookie consent notification overlapped your new pop-up or, even worse, that your checkout funnel is now broken.

Quality assurance (QA) testing is frequently overlooked because it is the last stage of the development process, and on-time delivery is often prioritized over quality. Yes, your stakeholders might be pleased with your punctuality, but end users will be disappointed by a bad experience.

Making Science has been helping clients with their conversion rate optimization (CRO) efforts for the last five years, and we have learned that running successful A/B tests requires meticulous planning and execution. Validating the quality of your experiments is paramount for accurate results and meaningful insights. Over the years, we have found VWO to be a powerful testing and personalization platform, equipped with useful tools for QA and pre-launch validation.

Here are some key QA best practices to incorporate into your VWO workflow:

1. Pre-Launch Checks:

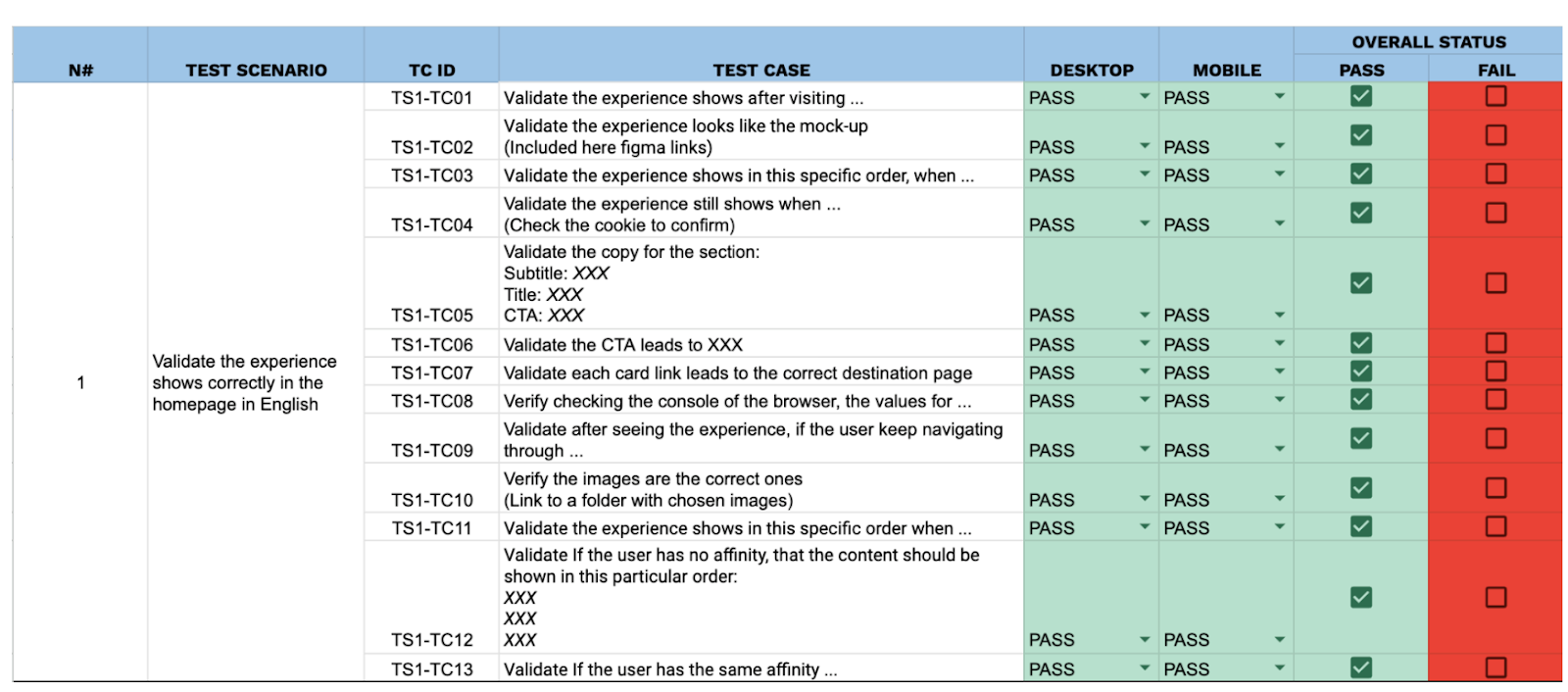

Documenting pre-launch QA is key, as you’ll have evidence and be able to map and visualize all the possible scenarios and actions a user could perform when interacting with the experience. Creating a detailed test plan file also helps optimize the bug reporting and fixing process, as you share your findings with the development team in a centralized report.

Img. 1. Test plan example
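
A test plan like the one in Img. 1 can be seeded programmatically so that no combination is forgotten. The Python sketch below is one hypothetical way to do it: it crosses each variation with the browsers, operating systems, and screen sizes you need to cover, drops combinations no real visitor could produce, and exports the rows as CSV for the shared report. The variation names, environment lists, and filtering rules are assumptions to adapt to your own traffic.

```python
import csv
import io
from itertools import product

# Assumed example values: adapt them to the variations and environments your visitors use.
VARIATIONS = ["Control", "Variation 1"]
BROWSERS = ["Chrome", "Firefox", "Safari", "Edge"]
OPERATING_SYSTEMS = ["Windows", "macOS", "iOS", "Android"]
VIEWPORTS = ["desktop", "tablet", "mobile"]

DESKTOP_OS = {"Windows", "macOS"}
SAFARI_OS = {"macOS", "iOS"}  # Safari only ships on Apple platforms
FIELDS = ["variation", "browser", "os", "viewport", "status", "notes"]


def is_realistic(browser: str, os_name: str, viewport: str) -> bool:
    """Return False for environment combinations no real visitor could produce."""
    if browser == "Safari" and os_name not in SAFARI_OS:
        return False
    # Assumption: desktop OSes are tested at desktop size, mobile OSes at tablet/mobile size.
    if os_name in DESKTOP_OS:
        return viewport == "desktop"
    return viewport in {"tablet", "mobile"}


def build_test_plan(variations, browsers, operating_systems, viewports):
    """Create one pending test-plan row per variation and realistic environment."""
    return [
        {
            "variation": variation,
            "browser": browser,
            "os": os_name,
            "viewport": viewport,
            "status": "pending",  # set to "pass" or "fail" during QA
            "notes": "",
        }
        for variation, browser, os_name, viewport in product(
            variations, browsers, operating_systems, viewports
        )
        if is_realistic(browser, os_name, viewport)
    ]


def to_csv(rows) -> str:
    """Serialize the plan so it can be shared with the development team."""
    buffer = io.StringIO()
    writer = csv.DictWriter(buffer, fieldnames=FIELDS)
    writer.writeheader()
    writer.writerows(rows)
    return buffer.getvalue()


plan = build_test_plan(VARIATIONS, BROWSERS, OPERATING_SYSTEMS, VIEWPORTS)
print(len(plan))  # 42 rows: 21 environments for each of the 2 variations
```

Each row then becomes a line in the test plan where testers record the result and any notes for the bug report.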

- Thorough Visual Inspection:

○ Cross-browser/device testing: Verify consistent appearance and functionality across different browsers (Chrome, Firefox, Safari, Edge), operating systems (Windows, macOS, iOS, Android), and screen sizes (desktop, tablet, mobile).

○ Check for broken images, misaligned elements, and unexpected CSS/JavaScript conflicts.

- Functional Testing:

○ Test all variations thoroughly: Ensure all elements within each variation function as expected (e.g., buttons click, forms submit, animations play correctly).

○ Test all user flows: Simulate user journeys within each variation to identify any roadblocks or unexpected behavior.

- Data Layer Validation:

○ Confirm that all relevant events and data are being captured correctly in the dataLayer to ensure accurate tracking of conversions and other key metrics.

○ Use browser debugging tools (e.g., Chrome DevTools) to inspect the dataLayer and verify its contents.
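
To make the dataLayer check repeatable, one option is to export the array from the page and validate it against your tracking plan. In the Chrome DevTools console, `copy(JSON.stringify(window.dataLayer))` copies the current dataLayer to the clipboard (assuming it only contains serializable values); the minimal Python sketch below then checks the exported pushes. The tracking plan (event names and required keys) and the `load_export` helper are hypothetical and should mirror the events your primary and secondary metrics depend on.

```python
import json

# Hypothetical tracking plan: event name -> keys every push of that event must carry.
TRACKING_PLAN = {
    "add_to_cart": {"ecommerce"},
    "begin_checkout": {"ecommerce"},
    "purchase": {"ecommerce", "transaction_id"},
}


def load_export(path: str) -> list:
    """Read a dataLayer array pasted into a JSON file from the DevTools console."""
    with open(path, encoding="utf-8") as handle:
        return json.load(handle)


def validate_data_layer(pushes: list, plan: dict) -> list:
    """Return human-readable problems found in an exported dataLayer."""
    problems = []
    seen = set()
    for index, push in enumerate(pushes):
        event = push.get("event") if isinstance(push, dict) else None
        if event not in plan:
            continue  # pushes outside the tracking plan (e.g. gtm.js) are ignored
        seen.add(event)
        missing = plan[event] - set(push)
        if missing:
            problems.append(f"push #{index} '{event}' is missing {sorted(missing)}")
    for event in sorted(set(plan) - seen):
        problems.append(f"expected event '{event}' was never pushed")
    return problems


# Illustrative export from a variation where the purchase push lacks a transaction ID.
sample = [
    {"event": "gtm.js", "gtm.start": 1700000000000},
    {"event": "add_to_cart", "ecommerce": {"value": 20}},
    {"event": "purchase", "ecommerce": {"value": 20}},
]
for problem in validate_data_layer(sample, TRACKING_PLAN):
    print(problem)
# push #2 'purchase' is missing ['transaction_id']
# expected event 'begin_checkout' was never pushed
```

In practice you would paste the copied array into a file (for example, a hypothetical `dataLayer.json`) and pass `load_export("dataLayer.json")` instead of the sample. Running the same check on the control and on each variation shows quickly whether a change in the variation broke a tag one of your metrics relies on.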

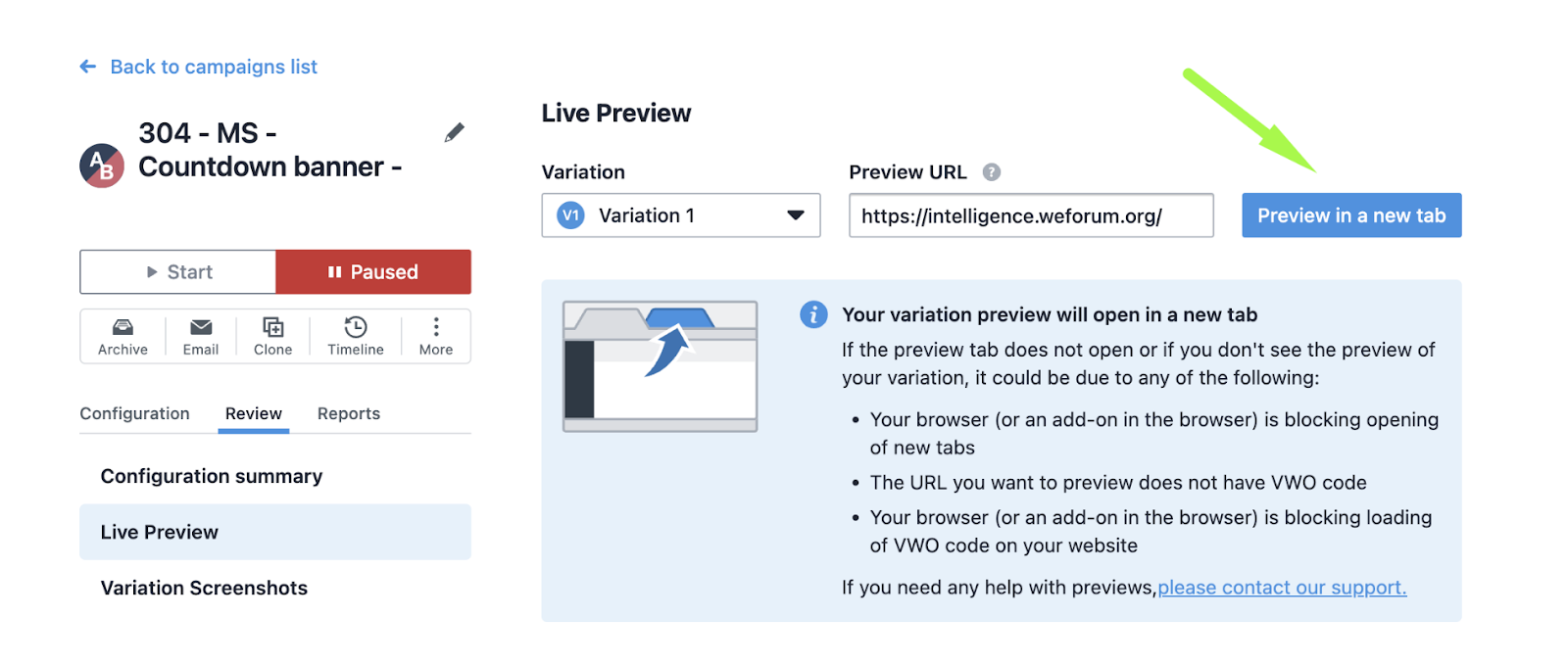

VWO offers a Live Preview feature, offering a safe way to test and pre-visualize an experience, just by adding the URL you want to preview:

Live Preview feature location in VWO

2. During Experiment Run:

- Regular Monitoring:

○ Monitor key metrics closely: Track conversion rates, traffic distribution, and other relevant metrics daily to identify any anomalies or unexpected trends. In VWO, you can use existing metrics or create new ones as guardrails, that is, metrics that must not deteriorate while you optimize your primary goal. When you choose a metric as a guardrail, it is automatically added as a secondary metric in your campaign, so you can track it alongside your main success metrics.

○ Use VWO’s built-in reporting and analytics features to analyze experiment performance.

- Address Issues Promptly:

○ If any issues are identified (e.g., technical errors, unexpected traffic fluctuations), address them immediately to prevent data contamination.

○ Make necessary adjustments to the experiment setup or targeting rules.
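
Daily monitoring is easier to sustain when the obvious red flags are checked automatically. The sketch below is a hypothetical helper, not a VWO feature: it takes daily visitor and guardrail-conversion counts per variation (for example, copied from your reports) and flags days where a variation received no traffic, tracked zero conversions while the control did not, or fell more than an assumed 10% below the control's rate. The counts in the example are illustrative, and a single day's drop is noisy, so treat these flags as prompts to investigate rather than as statistical conclusions.

```python
from dataclasses import dataclass


@dataclass
class DailySnapshot:
    day: str                    # ISO date, e.g. "2024-05-01", so string sorting is chronological
    variation: str
    visitors: int
    guardrail_conversions: int  # conversions on the guardrail metric (e.g. checkout completions)


def check_guardrail(snapshots, control="Control", max_relative_drop=0.10):
    """Return alerts for days where a variation looks broken or harmful on the guardrail."""
    by_day = {}
    for snap in snapshots:
        by_day.setdefault(snap.day, {})[snap.variation] = snap

    alerts = []
    for day in sorted(by_day):
        base = by_day[day].get(control)
        if base is None or base.visitors == 0:
            alerts.append(f"{day}: no control traffic recorded")
            continue
        base_rate = base.guardrail_conversions / base.visitors
        for name, snap in sorted(by_day[day].items()):
            if name == control:
                continue
            if snap.visitors == 0:
                alerts.append(f"{day}: {name} received no traffic")
            elif snap.guardrail_conversions == 0 and base.guardrail_conversions > 0:
                alerts.append(f"{day}: {name} tracked zero conversions (check tracking)")
            else:
                rate = snap.guardrail_conversions / snap.visitors
                # Heuristic only: a relative drop larger than the assumed tolerance.
                if base_rate > 0 and (base_rate - rate) / base_rate > max_relative_drop:
                    alerts.append(
                        f"{day}: {name} guardrail rate {rate:.2%} vs control {base_rate:.2%}"
                    )
    return alerts


snapshots = [
    DailySnapshot("2024-05-01", "Control", 1000, 50),
    DailySnapshot("2024-05-01", "Variation 1", 1000, 30),
    DailySnapshot("2024-05-02", "Control", 1000, 50),
    DailySnapshot("2024-05-02", "Variation 1", 1000, 0),
]
for alert in check_guardrail(snapshots):
    print(alert)
```

The zero-conversion rule targets the scenario from the introduction, where a metric silently stops tracking after launch.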

3. Post-Launch Analysis:

- Data Validation:

○ Scrutinize the collected data for any inconsistencies or outliers (e.g., verify that a 50/50 A/B test is actually delivering each variation to roughly half of the traffic).

○ Verify that the data accurately reflects user behavior and experiment performance.
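
The 50/50 check can be made objective with a sample ratio mismatch (SRM) test: a chi-square goodness-of-fit test comparing the observed visitors per variation with the split you configured. The sketch below handles the two-variation case using only Python's standard library. The 0.01 significance threshold is an assumption (some teams use a stricter 0.001), and the visitor counts in the example are made up.

```python
import math


def srm_check(visitors_a: int, visitors_b: int,
              expected_share_a: float = 0.5, alpha: float = 0.01):
    """Chi-square goodness-of-fit test for sample ratio mismatch between two groups.

    Returns (chi2 statistic, p-value, True if the split looks broken).
    """
    total = visitors_a + visitors_b
    expected_a = total * expected_share_a
    expected_b = total - expected_a
    chi2 = ((visitors_a - expected_a) ** 2 / expected_a
            + (visitors_b - expected_b) ** 2 / expected_b)
    # Two groups give 1 degree of freedom, where P(X > chi2) = erfc(sqrt(chi2 / 2)).
    p_value = math.erfc(math.sqrt(chi2 / 2))
    return chi2, p_value, p_value < alpha


chi2, p_value, mismatch = srm_check(5000, 4700)
print(f"chi2={chi2:.2f}, p={p_value:.4f}, SRM suspected: {mismatch}")  # mismatch is True here
```

If the check flags a mismatch, investigate the cause (for example, redirects or targeting rules that affect only one variation) before trusting any result from the test.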

- Statistical Significance:

○ Ensure that the statistical significance of the results is sufficient to draw meaningful conclusions.
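
To build intuition for what a Bayesian comparison reports, the sketch below computes two quantities for a conversion metric: the probability that the variation beats the control, and the probability that the true difference falls inside a region of practical equivalence (ROPE), an interval of differences too small to matter. It is a generic textbook illustration, not VWO's SmartStats algorithm: it assumes uniform Beta(1, 1) priors, approximates each Beta posterior with a normal distribution, and uses hypothetical decision thresholds (95% probability and a ROPE of ±0.2 percentage points).

```python
import math


def normal_cdf(x: float) -> float:
    """Standard normal cumulative distribution function."""
    return 0.5 * (1.0 + math.erf(x / math.sqrt(2.0)))


def posterior(conversions: int, visitors: int):
    """Mean and variance of the Beta(1 + c, 1 + n - c) posterior for a conversion rate."""
    a = 1 + conversions
    b = 1 + visitors - conversions
    mean = a / (a + b)
    variance = mean * (1 - mean) / (a + b + 1)
    return mean, variance


def compare(control, variation, rope=0.002, threshold=0.95):
    """Compare (conversions, visitors) pairs using a normal approximation of the posteriors."""
    mean_a, var_a = posterior(*control)
    mean_b, var_b = posterior(*variation)
    diff_mean = mean_b - mean_a          # expected absolute difference in conversion rate
    diff_sd = math.sqrt(var_a + var_b)   # independent posteriors, so the variances add
    p_beat = normal_cdf(diff_mean / diff_sd)  # P(difference > 0)
    p_rope = (normal_cdf((rope - diff_mean) / diff_sd)
              - normal_cdf((-rope - diff_mean) / diff_sd))
    if p_beat > threshold:
        decision = "variation is better"
    elif p_beat < 1 - threshold:
        decision = "control is better"
    elif p_rope > threshold:
        decision = "stop: no meaningful difference"
    else:
        decision = "keep collecting data"
    return p_beat, p_rope, decision


print(compare((500, 10_000), (600, 10_000)))      # decision: "variation is better"
print(compare((5_000, 100_000), (5_000, 100_000)))  # decision: "stop: no meaningful difference"
```

Like an MDE, the ROPE width encodes the smallest difference worth acting on, so it is best chosen from business impact before the test starts rather than from the data.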

VWO’s SmartStats uses Bayesian sequential testing to give you more flexibility in running A/B tests. You can adjust the statistical settings to suit your business goals and get faster, reliable results you can confidently act on. VWO also lets you set a minimum detectable effect (MDE), so your tests focus on finding improvements large enough to matter. This is especially useful for low-traffic pages, where it keeps you from spending resources on tiny, unimportant changes. In addition, VWO’s stats engine uses a region of practical equivalence (ROPE) to recommend stopping a test early when the variation is unlikely to make a big enough difference, saving you time and effort.

- Documentation:

○ Document all aspects of the experiment, including hypotheses, setup, results, and learnings.

○ This documentation will be valuable for future reference and optimization efforts.

- Tools and Resources:

○ VWO’s built-in testing tools: Utilize VWO’s features for visual testing, browser compatibility testing, and dataLayer debugging.

○ Browser developer tools: Leverage browser developer tools (e.g., Chrome DevTools) for inspecting elements, debugging JavaScript, and analyzing network requests.

By implementing these QA best practices, you can significantly improve the quality of your VWO experiments, increase the reliability of your results, and maximize the impact of your CRO efforts.
